devops-stack-module-cluster-aks

A DevOps Stack module to deploy a Kubernetes cluster on Azure AKS.

This module uses the Terraform module "aks" by Azure to deploy and manage an AKS cluster. It was created to also manage other resources required by the DevOps Stack, such as the DNS records, resource group and subnet specific to the cluster (these resources are helpful for the blue/green upgrade strategy). The module also provides the outputs needed by the other DevOps Stack modules.

By default, this module creates the AKS control plane and a default node pool composed of 3 nodes of the type Standard_D2s_v3. If no version is specified, the AKS cluster and node pool will be created with the latest available version.

Note that the versions of the node pools cannot be higher than that of the control plane.
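As a minimal sketch of this constraint, the fragment below sets both version variables documented in the inputs further down: kubernetes_version for the control plane and orchestrator_version for the default node pool. The version numbers are illustrative placeholders, not recommendations, and the upgrade procedure later in this document recommends leaving orchestrator_version unset.

```hcl
# Fragment to place inside the module "aks" block.
# Version values are placeholders chosen for illustration only.
kubernetes_version   = "1.28" # control plane version
orchestrator_version = "1.28" # default node pool version; must not exceed the control plane
```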

Usage

This module can be used with the following declaration:

    module "aks" {
      source = "git::https://github.com/camptocamp/devops-stack-module-cluster-aks.git?ref=<RELEASE>"

      cluster_name         = local.cluster_name
      base_domain          = local.base_domain
      location             = resource.azurerm_resource_group.main.location
      resource_group_name  = resource.azurerm_resource_group.main.name
      virtual_network_name = resource.azurerm_virtual_network.this.name
      cluster_subnet       = local.cluster_subnet

      kubernetes_version = local.kubernetes_version
      sku_tier           = local.sku_tier

      # ENTRA_ID_GROUP_UUID is a placeholder for the object ID of your group
      rbac_aad_admin_group_object_ids = [
        ENTRA_ID_GROUP_UUID,
      ]

      depends_on = [resource.azurerm_resource_group.main]
    }

Multiple node pools can be defined with the node_pools variable:

    module "aks" {
      source = "git::https://github.com/camptocamp/devops-stack-module-cluster-aks.git?ref=<RELEASE>"

      cluster_name         = local.cluster_name
      base_domain          = local.base_domain
      location             = resource.azurerm_resource_group.main.location
      resource_group_name  = resource.azurerm_resource_group.main.name
      virtual_network_name = resource.azurerm_virtual_network.this.name
      cluster_subnet       = local.cluster_subnet

      kubernetes_version = local.kubernetes_version
      sku_tier           = local.sku_tier

      # ENTRA_ID_GROUP_UUID is a placeholder for the object ID of your group
      rbac_aad_admin_group_object_ids = [
        ENTRA_ID_GROUP_UUID,
      ]

      # Extra node pools
      node_pools = {
        extra = {
          vm_size    = "Standard_D2s_v3"
          node_count = 2
        },
      }

      depends_on = [resource.azurerm_resource_group.main]
    }

Upgrading the Kubernetes cluster

In our experience, enabling auto-upgrades is a good practice, but only up to a point. We recommend enabling auto-upgrades for the control plane and the node pools.

To upgrade between minor versions, you are required to first upgrade the control plane and then the node pools. This is because the node pools cannot be of a higher version than the control plane.

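If using the patch upgrade channel, you cannot specify the patch version in kubernetes_version or orchestrator_version. This is why we recommend leaving the orchestrator_version variable unset.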

Our recommended procedure for upgrading the cluster is as follows:

-

Ensure that the

orchestrator_versionis not set in any part of your code. -

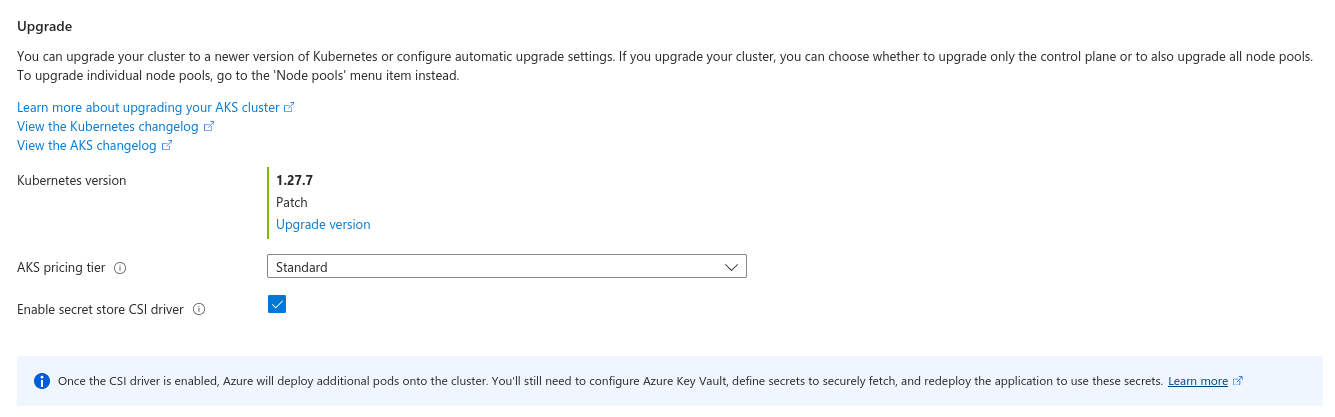

Go to the Azure portal and select your cluster. Then on the overview tab, click on the version of the control plane ans you should see a page like below. Click on Upgrade version.

-

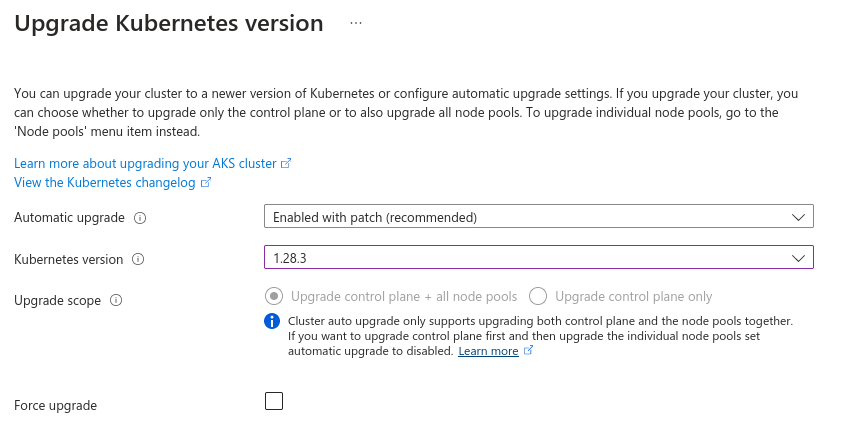

Afterwards, on the next screen, select the next minor version, make sure you’ve selected to upgrade the control plane and all the node pools, then start.

-

Wait for all the components to finish the upgrade. Then, you can set the

kubernetes_versionvariable to the minor version which you’ve just upgraded to and apply the Terraform code. This will reconcile the Terraform state with the actual state of the cluster.
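The last step of the procedure could look like the fragment below. It is only a sketch: the version value is a hypothetical placeholder standing in for whichever minor version you selected in the Azure portal.

```hcl
# Fragment to place inside the module "aks" block, once the portal upgrade has finished.
# "1.29" is a hypothetical value; use the minor version the cluster is now running.
kubernetes_version = "1.29"

# orchestrator_version is intentionally left unset, as recommended in the first step.
```

Running terraform plan before applying is a simple way to check that the only remaining difference is the version recorded in the state.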

Automatic cluster upgrades

You can enable automatic upgrades of the control plane and node pools by setting the automatic_channel_upgrade variable to a desired value. This will automatically upgrade the control plane and node pools to the latest available version given the constraints you defined in said variable. You can also specify the maintenance_window variable to set a maintenance window for the upgrades.

An example of these settings is as follows:

    automatic_channel_upgrade = "patch"

    maintenance_window = {
      allowed = [
        {
          day   = "Sunday",
          hours = [22, 23]
        },
      ]
      not_allowed = []
    }

You can also set the node_os_channel_upgrade and maintenance_window_node_os variables to upgrade the OS image of the Kubernetes cluster nodes.
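A sketch of the node OS settings is shown below. The channel value comes from the list of possible values documented for node_os_channel_upgrade. The shape of maintenance_window_node_os (frequency, interval, day_of_week, start_time, duration) is an assumption based on the variable of the same name in the upstream Azure module, so check that module's documentation for the required fields before using it.

```hcl
# Fragment to place inside the module "aks" block.
node_os_channel_upgrade = "SecurityPatch"

# Assumed structure; verify the field names against the upstream module's
# maintenance_window_node_os variable.
maintenance_window_node_os = {
  frequency   = "Weekly"
  interval    = 1        # every week
  day_of_week = "Sunday"
  start_time  = "22:00"
  duration    = 4        # hours
}
```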

Technical Reference

Resources

The following resources are used by this module:

- azurerm_dns_cname_record.this (resource)
- azurerm_resource_group.this (resource)
- azurerm_subnet.this (resource)
- azurerm_dns_zone.this (data source)

Required Inputs

The following input variables are required:

cluster_name

Description: The name of the Kubernetes cluster to create.

Type: string

base_domain

Description: The base domain used for Ingresses. If not provided, nip.io will be used taking the NLB IP address.

Type: string

location

Description: The location where the Kubernetes cluster will be created, alongside its own resource group and associated resources.

Type: string

resource_group_name

Description: The name of the common resource group (for example, where the virtual network and the DNS zone reside).

Type: string

virtual_network_name

Description: The name of the virtual network where to deploy the cluster.

Type: string

cluster_subnet

Description: The subnet CIDR where to deploy the cluster, included in the virtual network created.

Type: string

Optional Inputs

The following input variables are optional (have default values):

dns_zone_resource_group_name

Description: The name of the resource group which contains the DNS zone for the base domain.

Type: string

Default: "default"

sku_tier

Description: The SKU Tier that should be used for this Kubernetes Cluster. Possible values are Free and Standard.

Type: string

Default: "Free"

kubernetes_version

Description: The Kubernetes version to use on the control-plane.

Type: string

Default: "1.28"

automatic_channel_upgrade

Description: The upgrade channel for this Kubernetes Cluster. Possible values are patch, rapid, node-image and stable. By default automatic-upgrades are turned off. Note that you cannot specify the patch version using kubernetes_version or orchestrator_version when using the patch upgrade channel. See the documentation for more information.

Type: string

Default: null

maintenance_window

Description: Maintenance window configuration of the managed cluster. Only has an effect if the automatic upgrades are enabled using the variable automatic_channel_upgrade. Please check the variable of the same name on the original module for more information and to see the required values.

Type: any

Default: null

node_os_channel_upgrade

Description: The upgrade channel for this Kubernetes Cluster Nodes' OS Image. Possible values are Unmanaged, SecurityPatch, NodeImage and None.

Type: string

Default: null

maintenance_window_node_os

Description: Maintenance window configuration for this Kubernetes Cluster Nodes' OS Image. Only has an effect if the automatic upgrades are enabled using the variable node_os_channel_upgrade. Please check the variable of the same name on the original module for more information and to see the required values.

Type: any

Default: null

network_policy

Description: Sets up network policy to be used with Azure CNI. Network policy allows us to control the traffic flow between pods. Currently supported values are calico and azure. Changing this forces a new resource to be created.

Type: string

Default: "azure"

rbac_aad_admin_group_object_ids

Description: Object IDs of groups with administrator access to the cluster.

Type: list(string)

Default: null

tags

Description: Any tags that should be present on the AKS cluster resources.

Type: map(string)

Default: {}

agents_labels

Description: A map of Kubernetes labels which should be applied to nodes in the default node pool. Changing this forces a new resource to be created.

Type: map(string)

Default: {}

agents_size

Description: The default virtual machine size for the Kubernetes agents. Changing this without specifying var.temporary_name_for_rotation forces a new resource to be created.

Type: string

Default: "Standard_D2s_v3"

agents_count

Description: The number of nodes that should exist in the default node pool.

Type: number

Default: 3

agents_max_pods

Description: The maximum number of pods that can run on each agent. Changing this forces a new resource to be created.

Type: number

Default: null

agents_pool_max_surge

Description: The maximum number or percentage of nodes which will be added to the default node pool size during an upgrade.

Type: string

Default: "10%"

temporary_name_for_rotation

Description: Specifies the name of the temporary node pool used to cycle the default node pool for VM resizing. The var.agents_size is no longer ForceNew and can be resized by specifying temporary_name_for_rotation.

Type: string

Default: null

orchestrator_version

Description: The Kubernetes version to use for the default node pool. If undefined, defaults to the most recent version available on Azure.

Type: string

Default: null

os_disk_size_gb

Description: Disk size for default node pool nodes in GBs. The disk type created is by default Managed.

Type: number

Default: 50

node_pools

Description: A map of node pools that need to be created and attached on the Kubernetes cluster. The key of the map can be the name of the node pool, and the key must be a static string. The required value for the map is a node_pool block as defined in the variable of the same name present in the original module, available here.

Type: any

Default: {}
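As an illustration of how several of the optional inputs above combine, the fragment below resizes the default node pool without forcing its recreation by setting temporary_name_for_rotation, and adds labels and tags. All values (VM size, temporary pool name, label and tag keys) are hypothetical examples.

```hcl
# Fragment to place inside the module "aks" block. All values are illustrative.
agents_size                 = "Standard_D4s_v3"
agents_count                = 3
temporary_name_for_rotation = "tmpdefault" # temporary pool used while the default pool is cycled

agents_labels = {
  "example.com/pool" = "default" # hypothetical label key
}

tags = {
  environment = "staging" # hypothetical tag
}
```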

Outputs

The following outputs are exported:

cluster_name

Description: Name of the AKS cluster.

base_domain

Description: The base domain for the cluster.

cluster_oidc_issuer_url

Description: The URL on the AKS cluster for the OpenID Connect identity provider.

node_resource_group_name

Description: The name of the resource group in which the cluster was created.

kubernetes_host

Description: Endpoint for your Kubernetes API server.

kubernetes_username

Description: Username for Kubernetes basic auth.

kubernetes_password

Description: Password for Kubernetes basic auth.

kubernetes_cluster_ca_certificate

Description: Certificate data required to communicate with the cluster.

kubernetes_client_key

Description: Certificate Client Key required to communicate with the cluster.

kubernetes_client_certificate

Description: Certificate Client Certificate required to communicate with the cluster.
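These outputs are typically passed to the providers used by the other DevOps Stack modules. The sketch below configures the hashicorp/kubernetes provider with them, assuming the module is declared as module "aks" and that the certificate outputs are already PEM-decoded. If they turn out to be base64-encoded, wrap them in base64decode().

```hcl
# Assumes the module is declared as module "aks" and that the outputs are
# already decoded; otherwise wrap each certificate value in base64decode().
provider "kubernetes" {
  host                   = module.aks.kubernetes_host
  cluster_ca_certificate = module.aks.kubernetes_cluster_ca_certificate
  client_certificate     = module.aks.kubernetes_client_certificate
  client_key             = module.aks.kubernetes_client_key
}
```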

Reference in table format


= Requirements

| Name | Version |
|---|---|
| azurerm | >= 3.81.0 |

= Providers

| Name | Version |
|---|---|
| azurerm | >= 3.81.0 |

= Modules

| Name | Source | Version |
|---|---|---|
| | Azure/aks/azurerm | ~> 7.0 |

= Resources

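| Name | Type |
|---|---|
| azurerm_dns_cname_record.this | resource |
| azurerm_resource_group.this | resource |
| azurerm_subnet.this | resource |
| azurerm_dns_zone.this | data source |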

= Inputs

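| Name | Description | Type | Default | Required |
|---|---|---|---|---|
| cluster_name | The name of the Kubernetes cluster to create. | string | n/a | yes |
| base_domain | The base domain used for Ingresses. If not provided, nip.io will be used taking the NLB IP address. | string | n/a | yes |
| location | The location where the Kubernetes cluster will be created, alongside its own resource group and associated resources. | string | n/a | yes |
| resource_group_name | The name of the common resource group (for example, where the virtual network and the DNS zone reside). | string | n/a | yes |
| dns_zone_resource_group_name | The name of the resource group which contains the DNS zone for the base domain. | string | "default" | no |
| sku_tier | The SKU Tier that should be used for this Kubernetes Cluster. Possible values are Free and Standard. | string | "Free" | no |
| kubernetes_version | The Kubernetes version to use on the control-plane. | string | "1.28" | no |
| automatic_channel_upgrade | The upgrade channel for this Kubernetes Cluster. Possible values are patch, rapid, node-image and stable. By default automatic-upgrades are turned off. Note that you cannot specify the patch version using kubernetes_version or orchestrator_version when using the patch upgrade channel. See the documentation for more information. | string | null | no |
| maintenance_window | Maintenance window configuration of the managed cluster. Only has an effect if the automatic upgrades are enabled using the variable automatic_channel_upgrade. Please check the variable of the same name on the original module for more information and to see the required values. | any | null | no |
| node_os_channel_upgrade | The upgrade channel for this Kubernetes Cluster Nodes' OS Image. Possible values are Unmanaged, SecurityPatch, NodeImage and None. | string | null | no |
| maintenance_window_node_os | Maintenance window configuration for this Kubernetes Cluster Nodes' OS Image. Only has an effect if the automatic upgrades are enabled using the variable node_os_channel_upgrade. Please check the variable of the same name on the original module for more information and to see the required values. | any | null | no |
| virtual_network_name | The name of the virtual network where to deploy the cluster. | string | n/a | yes |
| cluster_subnet | The subnet CIDR where to deploy the cluster, included in the virtual network created. | string | n/a | yes |
| network_policy | Sets up network policy to be used with Azure CNI. Network policy allows us to control the traffic flow between pods. Currently supported values are calico and azure. Changing this forces a new resource to be created. | string | "azure" | no |
| rbac_aad_admin_group_object_ids | Object IDs of groups with administrator access to the cluster. | list(string) | null | no |
| tags | Any tags that should be present on the AKS cluster resources. | map(string) | {} | no |
| | The default Azure AKS node pool name. | | | no |
| agents_labels | A map of Kubernetes labels which should be applied to nodes in the default node pool. Changing this forces a new resource to be created. | map(string) | {} | no |
| agents_size | The default virtual machine size for the Kubernetes agents. Changing this without specifying var.temporary_name_for_rotation forces a new resource to be created. | string | "Standard_D2s_v3" | no |
| agents_count | The number of nodes that should exist in the default node pool. | number | 3 | no |
| agents_max_pods | The maximum number of pods that can run on each agent. Changing this forces a new resource to be created. | number | null | no |
| agents_pool_max_surge | The maximum number or percentage of nodes which will be added to the default node pool size during an upgrade. | string | "10%" | no |
| temporary_name_for_rotation | Specifies the name of the temporary node pool used to cycle the default node pool for VM resizing. The var.agents_size is no longer ForceNew and can be resized by specifying temporary_name_for_rotation. | string | null | no |
| orchestrator_version | The Kubernetes version to use for the default node pool. If undefined, defaults to the most recent version available on Azure. | string | null | no |
| os_disk_size_gb | Disk size for default node pool nodes in GBs. The disk type created is by default Managed. | number | 50 | no |
| node_pools | A map of node pools that need to be created and attached on the Kubernetes cluster. The key of the map can be the name of the node pool, and the key must be a static string. The required value for the map is a node_pool block as defined in the variable of the same name present in the original module, available here. | any | {} | no |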

= Outputs

| Name | Description |
|---|---|
| cluster_name | Name of the AKS cluster. |
| base_domain | The base domain for the cluster. |
| cluster_oidc_issuer_url | The URL on the AKS cluster for the OpenID Connect identity provider. |
| node_resource_group_name | The name of the resource group in which the cluster was created. |
| kubernetes_host | Endpoint for your Kubernetes API server. |
| kubernetes_username | Username for Kubernetes basic auth. |
| kubernetes_password | Password for Kubernetes basic auth. |
| kubernetes_cluster_ca_certificate | Certificate data required to communicate with the cluster. |
| kubernetes_client_key | Certificate Client Key required to communicate with the cluster. |
| kubernetes_client_certificate | Certificate Client Certificate required to communicate with the cluster. |